Research in Artificial Intelligence

We study the foundations and systems of machine learning, spanning optimization, theory, and large-scale models, with a focus on reliable real-world impact.

Research Directions

We investigate the fundamental theory of machine learning, design efficient and effective algorithms, and develop systems that reliably work in real-world settings.

Research Projects

Explore our cutting-edge research projects pushing the boundaries of AI

LMFlow

An Extensible Toolkit for Finetuning and Inference of Large Foundation Models. Large Models for All.

July 15, 2025

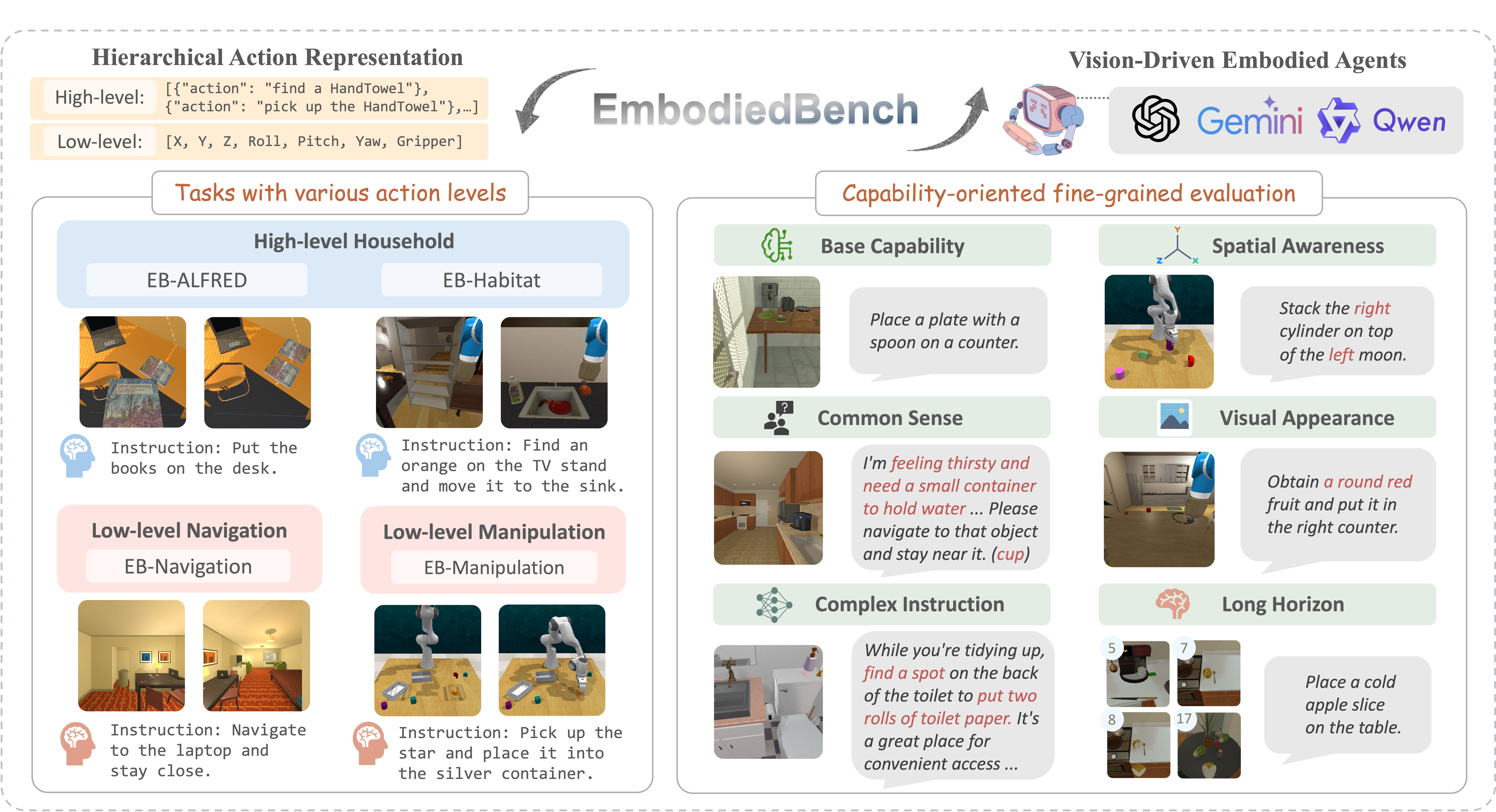

EmbodiedBench

A comprehensive benchmark for evaluating multi-modal large language models as vision-driven embodied agents across diverse environments and task complexities.

January 1, 2025

Latest News

Stay updated with our recent achievements and announcements

Our Paper Accepted at NeurIPS 2025

Research on LLMs published at a leading AI conference

Our Team

Meet the brilliant minds driving innovation in artificial intelligence research

Faculty

Students